In the dynamic world of artificial intelligence, three techniques stand out for optimizing AI models: prompt engineering, fine-tuning, and Retrieval-Augmented Generation (RAG). Each of these methods offers unique strengths, making them essential tools for anyone looking to supercharge their AI projects.

In this comprehensive guide, we’ll dive into these three techniques: their definitions, strengths, weaknesses, and real-world applications. By the end of this journey, you’ll have a clear understanding of how to leverage these powerful tools to optimize your AI models and create more efficient, accurate, and innovative solutions.

Understanding the Basics

To fully grasp the power of prompt engineering, fine-tuning, and RAG, it’s essential to understand their fundamentals. Each technique offers distinct advantages and is suited for different types of AI tasks.

Let’s take a closer look at each one, starting with prompt engineering.

Prompt Engineering: The Art of Asking

Prompt engineering is all about mastering the art of asking the right questions. By carefully crafting prompts, you can guide AI models to produce more accurate and relevant responses.

Think of it as being a master puppeteer but with words! This technique doesn’t require any special data, making it both cost-effective and accessible to anyone with a good understanding of the AI model and the task at hand.

When considering prompt engineering, keep these key points in mind:

- No special data required: Unlike the other techniques, prompt engineering relies solely on your ability to write effective prompts, so you can try it without budgeting for data or working out how to prepare a specific dataset.

- Relies on understanding the AI model and the task: You need a solid grasp of how the model behaves and what you want to achieve. Expect an iterative process of testing prompts to find which ones work best for your task, and which LLM is best suited to your task type.

- Cost-effective and accessible: With no need for extensive datasets or specialized training, prompt engineering is an economical choice for many projects.
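To make that iterative loop concrete, here is a minimal Python sketch that scores each prompt variant against a few test cases and keeps the best one. It rests on assumptions worth flagging: `call_model` is a hypothetical stand-in for a real LLM client (a toy rule-based stub, so the code runs offline), the sentiment task and test cases are made up for illustration, and exact-match accuracy is just one possible scoring rule.

```python
from typing import Callable

# Hypothetical stand-in for a real LLM client. It returns a bare label only
# when the prompt asks for a one-word answer, mimicking how prompt wording
# changes the shape of a model's output.
def call_model(prompt: str) -> str:
    if "one word" in prompt:
        return "positive" if "love" in prompt.lower() else "negative"
    return "The sentiment of this review seems fairly positive overall."

def accuracy(template: str, cases: list[tuple[str, str]],
             model: Callable[[str], str]) -> float:
    # Fraction of test cases where the model's answer exactly matches the label.
    hits = sum(model(template.format(text=text)).strip().lower() == label
               for text, label in cases)
    return hits / len(cases)

def best_prompt(templates: list[str], cases: list[tuple[str, str]],
                model: Callable[[str], str]) -> tuple[str, dict[str, float]]:
    # Score every variant, then return the top-scoring template and all scores.
    scores = {t: accuracy(t, cases, model) for t in templates}
    return max(scores, key=scores.get), scores

templates = [
    "Classify the sentiment of this review: {text}",
    "Classify the sentiment of this review in one word, positive or negative: {text}",
]
cases = [("I love this phone", "positive"),
         ("The battery died in a day", "negative")]

winner, scores = best_prompt(templates, cases, call_model)
print(winner)  # prints the second ("in one word") template
print(scores)
```

To compare LLMs as well as prompts, pass a different `model` callable for each model you want to try and compare the resulting scores.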

Some common prompt engineering techniques:

- Direct prompting (zero-shot): Direct prompting involves giving the AI model a single, clear instruction with no examples. This technique is straightforward and is often used for simple tasks where the desired outcome is clear and specific.

  Suppose you want the AI to generate a summary of a text. A direct prompt might be: “Summarize the following article: [insert article text].”

- Example-based prompting (few-shot): Example-based prompting involves offering a few examples to guide the AI in generating responses. This helps the AI understand the desired format or style, which is useful for tasks such as generating creative content or completing sentences.

  If you want the AI to write a product description, you might provide a few examples, then end with the product you want described, leaving its description for the model to complete:

  “Product: Smartwatch. Description: A sleek and modern smartwatch with heart rate monitoring and GPS.”

  “Product: Wireless Earbuds. Description: Compact and high-quality earbuds with noise cancellation and long battery life.”

- Chain-of-thought (CoT) prompting: Chain-of-thought prompting encourages the AI to work through a problem step by step before giving an answer. This technique is particularly effective for complex tasks that require multi-step reasoning, such as arithmetic word problems or logic puzzles.

  For example, if you want the AI to solve a math problem, you might guide it through the steps: “Solve the following problem step-by-step: If you have 5 apples and you give 2 to a friend, how many apples do you have left?”
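The three styles differ only in how the prompt text is assembled, which a few small Python helpers can illustrate. This is a sketch: the function names are hypothetical, the wording reuses the examples above, and the “Fitness Tracker” product is a made-up placeholder. The helpers only build strings; sending them to a model is left to whatever client you use.

```python
# Illustrative prompt builders for the three styles above. They only
# assemble strings; no particular model or API is assumed.

def zero_shot(instruction: str, text: str) -> str:
    # Direct prompting: a single clear instruction, no examples.
    return f"{instruction}: {text}"

def few_shot(examples: list[tuple[str, str]], product: str) -> str:
    # Example-based prompting: show finished examples, then leave the last
    # description blank so the model continues the same pattern.
    lines = [f"Product: {name}. Description: {desc}" for name, desc in examples]
    lines.append(f"Product: {product}. Description:")
    return "\n".join(lines)

def chain_of_thought(problem: str) -> str:
    # Chain-of-thought prompting: explicitly request intermediate steps.
    return f"Solve the following problem step-by-step: {problem}"

examples = [
    ("Smartwatch", "A sleek and modern smartwatch with heart rate monitoring and GPS."),
    ("Wireless Earbuds", "Compact and high-quality earbuds with noise cancellation "
                         "and long battery life."),
]

print(zero_shot("Summarize the following article", "[insert article text]"))
print(few_shot(examples, "Fitness Tracker"))  # hypothetical product
print(chain_of_thought("If you have 5 apples and you give 2 to a friend, "
                       "how many apples do you have left?"))
```

Ending the few-shot prompt with an unfinished “Description:” line is a common design choice: it signals to the model exactly where, and in what format, its answer should go.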

Fine-tuning: Teaching an Old AI New Tricks

Fine-tuning is akin to sending your AI model to a fancy finishing school. While the model already has a wealth of general knowledge, fine-tuning continues its training on specialized data to tailor its responses to specific tasks or domains. This technique requires a well-curated dataset and can be resource-intensive, but the results are often worth the investment.

If you want to try fine-tuning techniques, here are some key points to consider:

- Requires a well-curated dataset: Fine-tuning needs a carefully selected dataset specific to your task, so you may need to invest time and money in sourcing and preparing data from your field.

- Offers detailed customization: This technique allows precise adjustments, enabling the model to perform exceptionally well in specialized areas. However, the model risks losing some of its general knowledge in favor of the specialized task, a problem known as catastrophic forgetting.

- Higher cost and resource-intensive: Fine-tuning demands significant computational resources and time, making it more expensive than prompt engineering.
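Much of the work in fine-tuning is preparing that dataset. As a hedged example, the sketch below writes training examples as chat-style JSON Lines, one `{"messages": [...]}` object per line with system, user, and assistant turns. This is the layout OpenAI’s fine-tuning API uses for chat models; other frameworks expect different schemas, so treat the format as an assumption. The accounting Q&A pairs and system prompt are hypothetical placeholders, and `validate_jsonl` is a simple sanity check of our own, not any provider’s official validator.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical domain examples: (user question, ideal assistant answer).
EXAMPLES = [
    ("What does 'net 30' mean on an invoice?",
     "Payment is due within 30 days of the invoice date."),
    ("What is a purchase order?",
     "A document a buyer issues to authorize a purchase on agreed terms."),
]
SYSTEM_PROMPT = "You are a concise accounting assistant."  # assumed persona

def to_record(question: str, answer: str) -> dict:
    # One training example in chat format: system, user, then assistant turn.
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}

def write_jsonl(path: Path, pairs: list[tuple[str, str]]) -> int:
    # JSON Lines: exactly one JSON object per line.
    with path.open("w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_record(question, answer)) + "\n")
    return len(pairs)

def validate_jsonl(path: Path) -> list[str]:
    # Collect problems instead of stopping at the first; an empty list means
    # every line parsed and ends with a non-empty assistant turn.
    problems = []
    lines = path.read_text(encoding="utf-8").splitlines()
    for i, line in enumerate(lines, start=1):
        try:
            last = json.loads(line)["messages"][-1]
            ok = last.get("role") == "assistant" and bool(last.get("content"))
        except (json.JSONDecodeError, KeyError, TypeError, IndexError, AttributeError):
            ok = False
        if not ok:
            problems.append(f"line {i}: invalid record or missing assistant answer")
    return problems

path = Path(tempfile.gettempdir()) / "train.jsonl"
count = write_jsonl(path, EXAMPLES)
print(count, validate_jsonl(path))  # 2 []
```

Catching malformed records locally is cheap, whereas discovering them after a training run has started costs both time and compute.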

RAG: The AI’s Personal Library

Retrieval-Augmented Generation, or RAG, is like giving your AI a VIP pass to the world’s coolest library. This technique combines the strengths of retrieval and generation by allowing the AI to access and incorporate external knowledge sources dynamically.

The result is a model that can provide more accurate and contextually relevant responses without requiring extensive retraining.

Here are some key points about this technique:

- Works with easier-to-obtain knowledge sources: RAG leverages existing knowledge bases, such as Wikipedia or domain-specific corpora, making it more accessible than creating specialized datasets.

- Combines retrieval and generation: The AI first retrieves relevant information from external sources, then uses it to generate its response.

- Offers a balance between customization and accessibility: RAG provides a middle ground between the simplicity of prompt engineering and the detailed customization of fine-tuning.
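The retrieve-then-generate flow can be sketched without any external services. This is a minimal sketch under stated assumptions: a tiny hypothetical in-memory knowledge base, and a simple bag-of-words cosine similarity standing in for the embedding model and vector database a production RAG system would typically use. It covers retrieval and augmentation and stops at the augmented prompt; the generation step is whatever LLM you send that prompt to.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this could be Wikipedia passages
# or internal documents split into chunks.
DOCS = [
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Refunds are issued within 14 days of receiving a returned item.",
    "The premium plan includes priority support and extra storage.",
]

def bag_of_words(text: str) -> Counter:
    # Lowercased word counts: a crude stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Step 1 (retrieval): rank documents by similarity to the query.
    q = bag_of_words(query)
    ranked = sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    # Step 2 (augmentation): place the retrieved text before the question so
    # the model can answer from it rather than from memory alone.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (f"Answer using only the context below.\n\nContext:\n{context}\n\n"
            f"Question: {query}\nAnswer:")

question = "How long do refunds take?"
print(retrieve(question, DOCS))
# ['Refunds are issued within 14 days of receiving a returned item.']
print(build_prompt(question, DOCS))
```

Because the knowledge lives in `DOCS` rather than in the model’s weights, updating the answers means editing the documents, with no retraining involved.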

Comparing the Trio: A Quick Guide

Each method offers unique benefits and is suited for different tasks and scenarios. Understanding their comparative strengths and weaknesses will help you choose the right approach for your specific AI project.

Whether you’re looking for a quick and cost-effective solution, detailed customization, or a balance between the two, let’s break down the key aspects of each technique to see which one suits you best:

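| | Prompt Engineering | Fine-Tuning | RAG |
|---|---|---|---|
| Strengths | No special data required; cost-effective and accessible | Detailed customization; strong performance in specialized areas | Works with easier-to-obtain knowledge sources; balances customization and accessibility |
| Weaknesses | Relies on a good understanding of the model and task; requires iterative trial and error | Requires a well-curated dataset; higher cost and resource-intensive; risk of forgetting general knowledge | Retrieval and processing can be resource-intensive |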

When to Use What: Real-World Scenarios

Choosing the right AI optimization technique can be a game-changer for your project. Each method—prompt engineering, fine-tuning, and Retrieval-Augmented Generation (RAG)—has its unique strengths and is suited for different scenarios.

To help you make an informed decision, let’s explore some real-world scenarios where each technique shines.

| | Prompt Engineering | Fine-Tuning | RAG |
|---|---|---|---|
| Task Focus | Quick prototyping and general-purpose tasks | Highly specialized applications | Tasks requiring up-to-date, contextually relevant information |
| Data Availability | No specific data is needed; just rely on task and model comprehension | Needs a carefully selected dataset specific to the task | Uses a knowledge source that is possibly more accessible than a specialized dataset |
| Computational Resources | Minimal computational resources | Computationally costly to train | Retrieval and processing can be resource-intensive, but less so than fine-tuning |
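If it helps to see the table as a decision procedure, here is a deliberately simplified Python sketch. The `Project` fields and the rule order are assumptions of this example rather than rules from the article, and real projects often involve trade-offs that a few booleans cannot capture.

```python
from dataclasses import dataclass

@dataclass
class Project:
    # Hypothetical project traits, loosely mirroring the table's rows.
    needs_current_info: bool    # Task Focus: up-to-date answers (RAG)
    has_knowledge_source: bool  # Data Availability: documents to retrieve from
    highly_specialized: bool    # Task Focus: niche domain (fine-tuning)
    has_curated_dataset: bool   # Data Availability: task-specific training data
    can_afford_training: bool   # Computational Resources: budget for training

def suggest_technique(p: Project) -> str:
    # Rule order is this sketch's own assumption, not a rule from the article.
    if p.needs_current_info and p.has_knowledge_source:
        return "RAG"
    if p.highly_specialized and p.has_curated_dataset and p.can_afford_training:
        return "fine-tuning"
    # Default: the cheapest option, needing no data and minimal compute.
    return "prompt engineering"

prototype = Project(needs_current_info=False, has_knowledge_source=False,
                    highly_specialized=False, has_curated_dataset=False,
                    can_afford_training=False)
print(suggest_technique(prototype))  # prompt engineering
```

Checking RAG first reflects the Task Focus row, where RAG is the pick for current information; swap the rule order if specialization matters more for your project.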

OnlyPrompts: Your Solution to Optimized Prompts

If prompt engineering is the technique that appeals to you most, OnlyPrompts is the perfect tool to consider.

Navigating the world of prompt engineering can be challenging, especially when you’re striving for optimal performance and efficiency. That’s where OnlyPrompts comes in. Our platform is designed to simplify and enhance the prompt engineering process, making it easier for you to create, test, and refine prompts for your AI models.

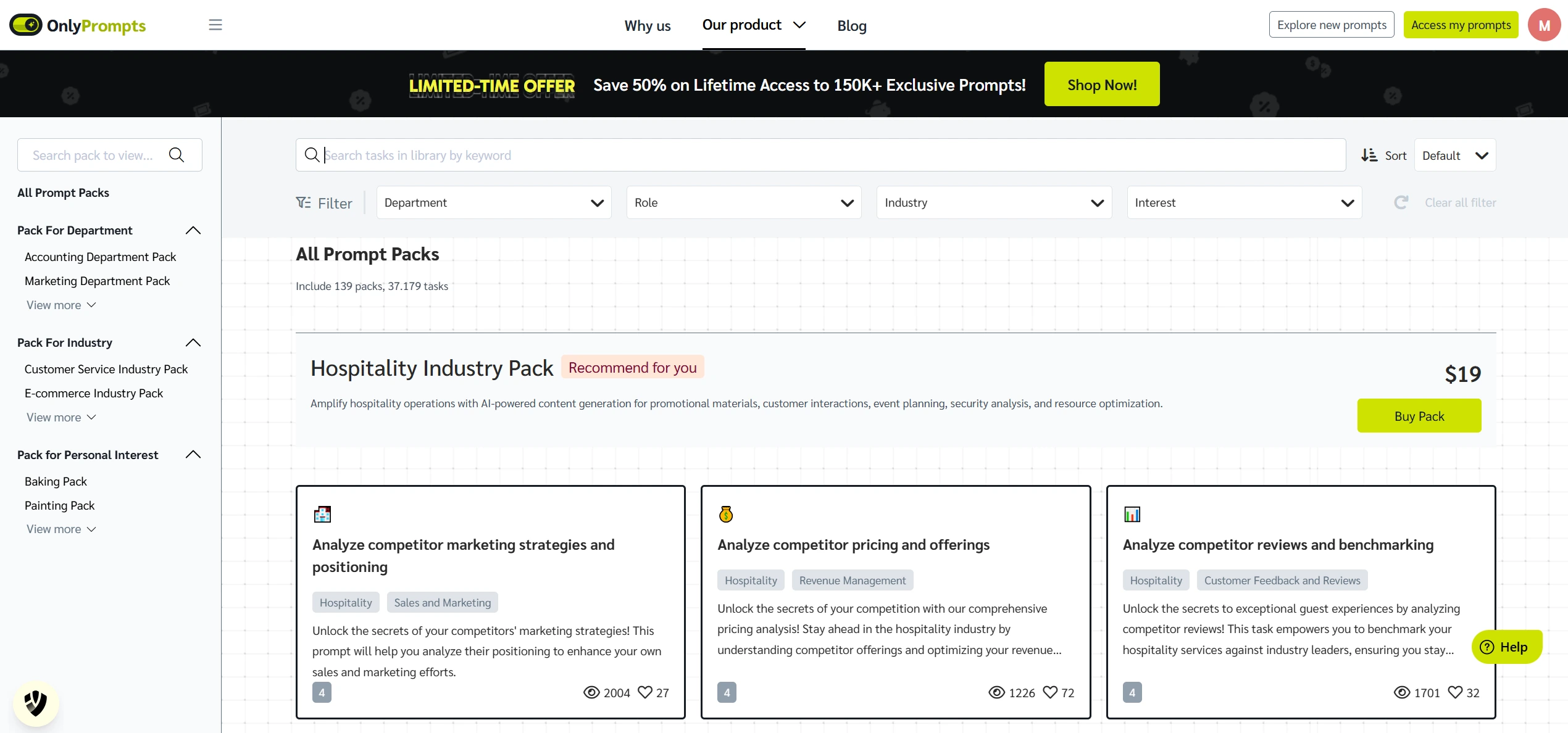

An Extensive Collection of over 150,000 Prompts

OnlyPrompts offers a vast library of over 150,000 prompt templates covering more than 37,000 tasks. Whether you’re working on a customer service chatbot, an educational tool, or a specialized industry application, you’ll find the prompts you need to get started quickly and efficiently.

“What if I can’t find the prompt I need?” you may ask.

No problem! Leave a request, and our team will work on adding it to the library.

We’re here to assist you with your prompt engineering journey :’)

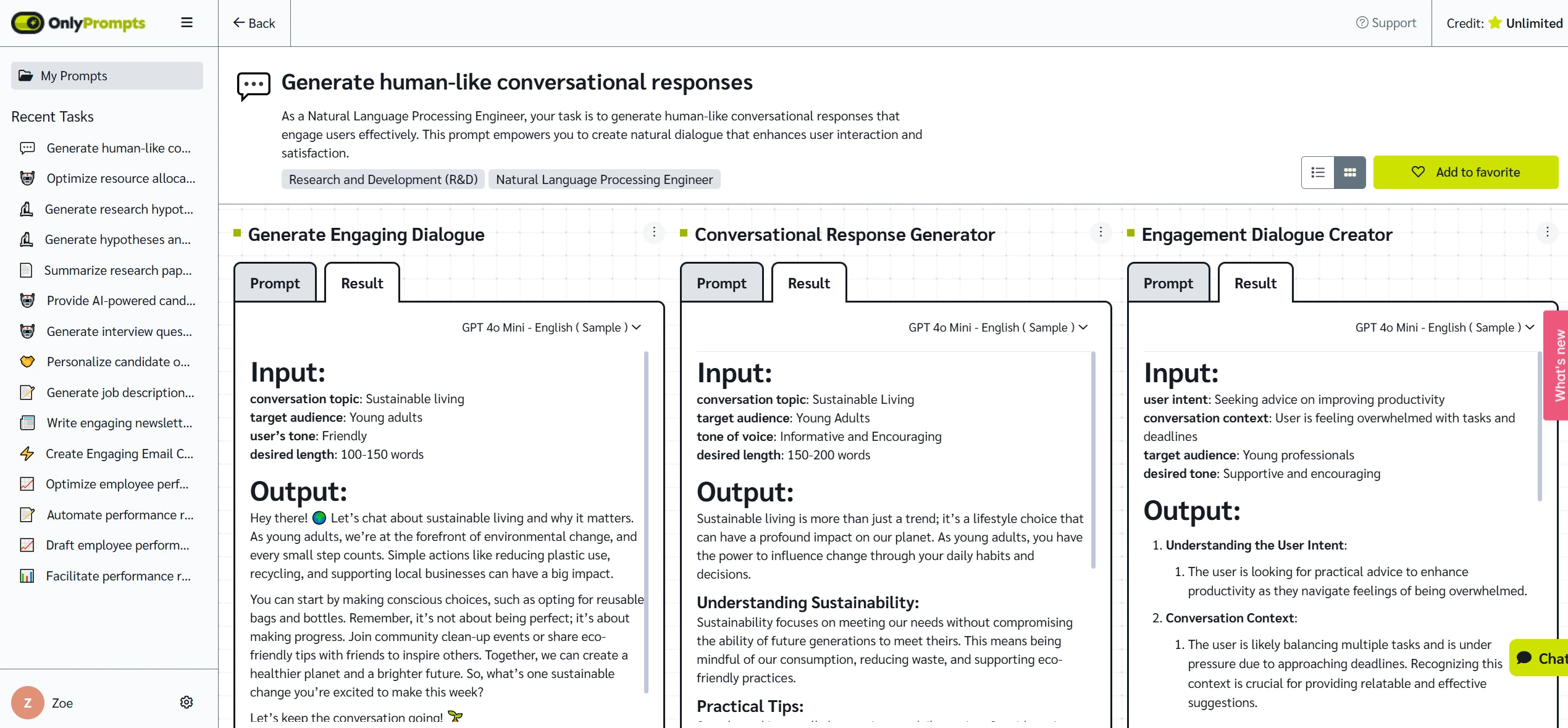

Iterate and Refine

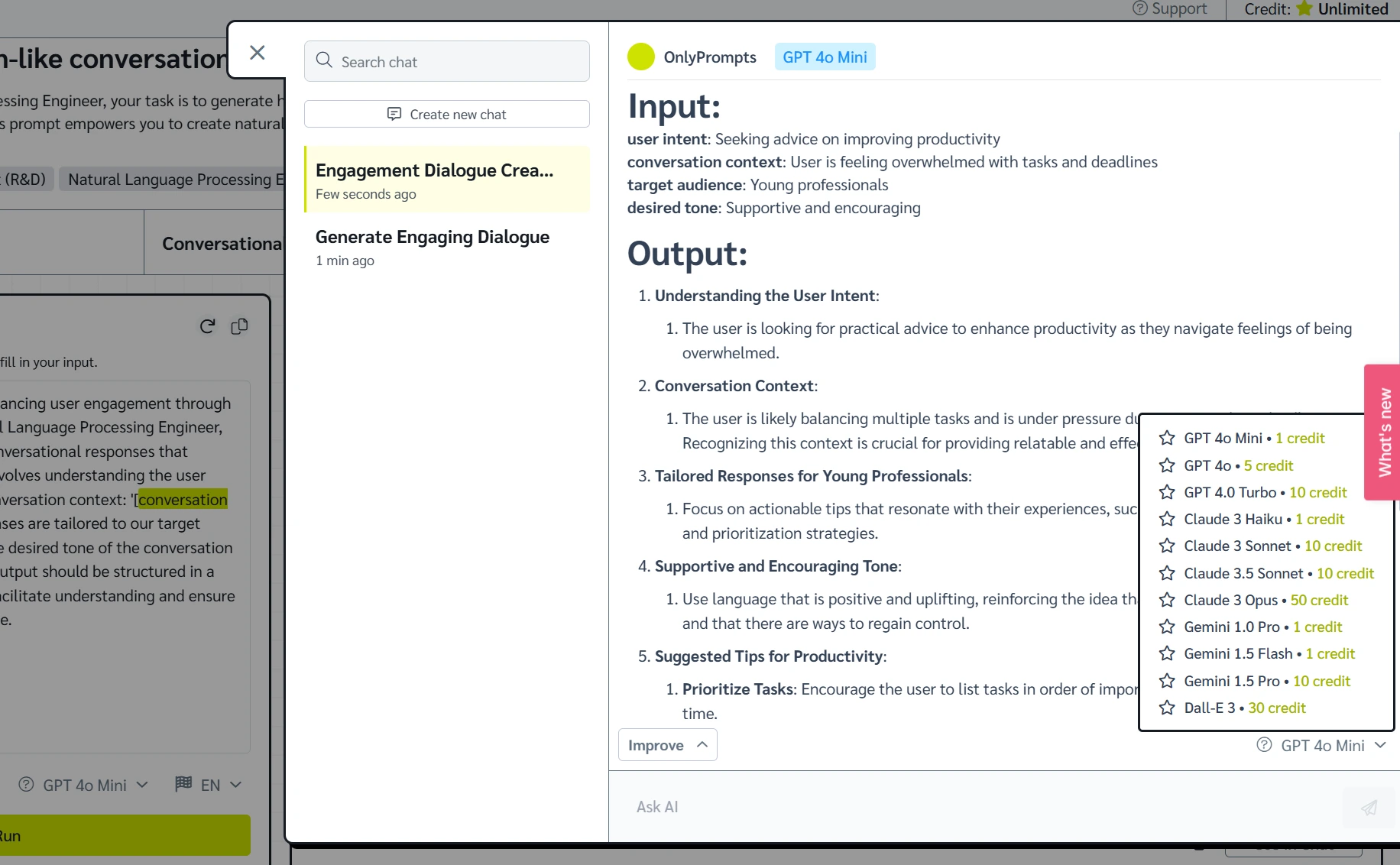

Prompt engineering often requires an iterative process to find the most effective prompts. With OnlyPrompts, you can test different prompt variants simultaneously with multiple LLMs, all within a single platform.

This streamlined process saves you time and effort, allowing you to compare results and choose the best-performing prompts without switching between different AI tools.

Supported models include GPT, Claude, and others.

And here’s the cherry on top: once you’ve got your results, you can continue refining them by chatting with different AI models right within OnlyPrompts. No need to juggle multiple tabs or switch between different platforms – everything you need is in one place.

Time and Cost Efficiency

OnlyPrompts offers various pricing options, so there's a plan that fits your needs and budget whether you’re a freelancer or part of a massive team.

- LTD (Lifetime Deal) plan: Perfect for those who like to commit long-term (and save big).

- Subscription plan: For those who prefer to keep their options open.

- Prompt pack option: Cherry-pick the prompt pack you need, with prices starting at just $19.

- One-time credit: Dip your toes in without diving all the way.

Ready to boost your productivity without breaking the bank? Your next big breakthrough might be just a prompt away.

Final Thoughts

Understanding and leveraging the right techniques—prompt engineering, fine-tuning, and Retrieval-Augmented Generation (RAG)—can significantly enhance the performance and efficiency of your projects.

Prompt engineering offers a cost-effective and accessible way to guide AI responses, making it ideal for quick prototyping and general-purpose tasks. Fine-tuning allows for detailed customization by retraining models on specialized datasets, perfect for highly specialized applications. RAG combines retrieval and generation to provide up-to-date and contextually relevant responses, making it invaluable for tasks requiring current information.

If prompt engineering resonates with you, OnlyPrompts is the ultimate tool to streamline the process. Start mastering these techniques and explore OnlyPrompts today!